Hi - newbie to the product.

I can successfully connect and download data. I want to download 12 fields for more than 2000 RICs.

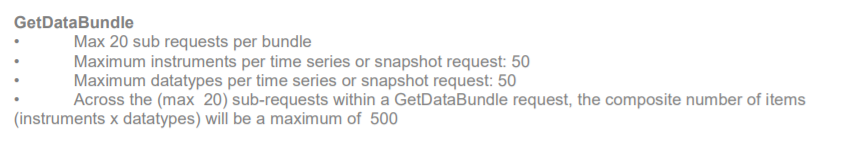

I have found that there is a limit of 50 RICs per request, as well as a limit of

RICs x fields <= 100

So the best I can figure out is to chunk my list of RICs into lists of 8 and then request the 12 data fields for each chunk (8 x 12 = 96 items per request).
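To show where the 8 comes from, here is a minimal sketch of the arithmetic, assuming the two limits really are as I described them (50 RICs per request, RICs x fields <= 100). `max_chunk_size` is just a helper name I made up, not part of the API, and the RIC list is a placeholder:

```python
import math


def max_chunk_size(n_fields, max_rics=50, max_items=100):
    """Largest number of RICs per request that satisfies both assumed limits."""
    if n_fields < 1:
        raise ValueError("need at least one field")
    size = min(max_rics, max_items // n_fields)
    if size < 1:
        raise ValueError("too many fields to fit in a single request")
    return size


def chunker(seq, size):
    """Yield consecutive slices of seq, each with at most `size` elements."""
    return (seq[pos:pos + size] for pos in range(0, len(seq), size))


n_fields = 12
rics = [f"RIC{i}" for i in range(2000)]  # placeholder identifiers, not real RICs

size = max_chunk_size(n_fields)          # min(50, 100 // 12)
n_requests = math.ceil(len(rics) / size)
print(size, n_requests)
```

With 12 fields the item limit is the binding one, so 2000 RICs means 250 separate requests, which is why I'm hoping there is a better approach.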

Is there a better way of doing this?

Thanks

    def chunker(seq, size):
        return (seq[pos:pos + size] for pos in range(0, len(seq), size))

    for group in chunker(df_rics['r'].to_list(), 8):
        ds_data = ds.get_data(tickers=', '.join(group),
                              fields=['EPS1UP', 'EPS1DN', 'EPS2UP', 'EPS2DN', 'DPS1UP',
                                      'DPS1DN', 'DPS2UP', 'DPS2DN', 'SAL1UP', 'SAL1DN', 'SAL2UP', 'SAL2DN'],
                              kind=0, start=end_date.strftime("%Y-%m-%d"))
        print(ds_data)